I have, at best, an uneasy relationship with Facebook. To paraphrase something that I’m writing at the moment (more about that shortly):

I first subscribed to Facebook because I was working in IT security research and needed to find out more about it, so I signed up to see how it worked from a user’s point of view. However, friends and colleagues in the security industry – who may well have signed up for similar reasons – quickly found me there and invited me to befriend them, and why wouldn’t I? Then relatives and friends from outside the security industry also sent me invitations, and it would have been churlish to ignore them. Having been partially assimilated I found myself looking for people I knew, especially those I’d lost touch with and with whom I hoped to resume contact. Several years on, I followed various groups and pages aligned with my own interests and activities. So yes, I’m currently willing to accept the trade-off between the social advantages and Facebook’s unwelcome intrusions.

That doesn’t mean, of course, that I’ve resisted the urge to write about Facebook, its shortcomings, and those who take advantage of them: in fact, FB and other social media platforms have supplied me with much blogging material (and hypertension) over the years, to the point where I’ve recently felt obliged to upcycle some of that material into a book project. (If that sounds interesting, you can probably assume that if it’s ever completed, it will be announced on this blog at some point.) I’d already mentioned the whistleblower Frances Haugen in the first draft when I learned that she’d written about her experiences in a book originally called The Power of One: How I Found the Strength to Tell the Truth and Why I Blew the Whistle on Facebook (Little, Brown and Company: published in the UK in 2023 by Hodder and Stoughton as The Power of One – Blowing the Whistle on Facebook). So, naturally, I had to read it.

The first thing to say is that this book has no direct connection that I can see with the 1989 novel The Power of One by Bryce Courtenay, or the slightly later film adaptation. Frances Haugen is best known (and to many of us only known) for having disclosed the contents of 22,000 pages of internal Facebook documents to the Wall Street Journal:

https://www.wsj.com/articles/the-facebook-files-11631713039

Subsequently, she revealed her own identity in October 2021, ahead of an interview on 60 Minutes.

https://www.nbcnews.com/tech/social-media/facebook-whistleblower-reveals-identity-accuses-platform-betrayal-democracy-n1280668

Additionally, she has testified before or otherwise engaged with a number of bodies in the US, Europe and the UK. These included a sub-committee of the US Senate Commerce Committee, the Securities and Exchange Commission, the UK Parliament, and the European Parliament. I’m not always the biggest fan of Wikipedia as a source of accurate information, but there seem to be quite a few useful supporting links here:

https://en.wikipedia.org/wiki/Frances_Haugen

The next thing to say is that this is absolutely not a technical guide to defending your privacy and security from Facebook/Meta, its sponsors, or its abusers, though if you happen to believe that Facebook is an example of all being for the best in the best of all possible Metaverses, the doubts that reading this book might raise may well lead to your wanting to find ways to improve your safety on Facebook and in social media in general. Without commenting on the accuracy of individual claims, I think that’s a Good Thing. But if you aren’t already gifted with a reasonable amount of healthy scepticism, I suppose you probably won’t be reading the book, let alone my less-than-famous blog. As for accuracy: much of what Haugen says and what others have said about her makes a lot of sense to me, as a long-time Facebook watcher and commentator, but I haven’t ploughed through the Facebook Files myself and am not likely to. If I did, I wouldn’t have the resources to verify everything.

The third point to make is that while Haugen makes good points about the need for increased responsibility, transparency, and accountability in social media, this is not an exhaustive guide to ‘fixing’ Meta, let alone other platforms. Judging from her frequent interaction with governmental bodies, she is content to provide information from which they can draw conclusions to drive their future policies and legislation, not push a policy agenda of her own. As she herself writes:

‘Any plan to move forward that’s premised on me personally proposing the solution is a plan that’s doomed to fall short. The “problem” with social media is not a specific feature or a set of design choices. The larger problem is that Facebook is allowed to operate in the dark.’

Elsewhere, she writes about the European Union’s Digital Services Act that:

‘I like to think of laws like the DSA as nutrition labels. In the United States the government does not tell you what you can put in your mouth at dinnertime, but it does require that food producers provide you with accurate information about what you’re eating.’

In fact, the book is by no means focused entirely on the exposure of Facebook. While it begins with Haugen’s presence at President Biden’s first State of the Union address, where she earned an individual citation as ‘the Facebook whistleblower’, a very large proportion of the subsequent chapters trace the steps that led her to Facebook and beyond from ‘When I Was Young in Iowa’, through Junior High, the Franklin W. Olin College of Engineering and MIT, Google, Harvard Business School, Pinterest, and so on. We hear about her issues with coeliac disease, divorce, victimization by sexist fellow-students, and other negative experiences. We don’t, perhaps, need to know about these in order to assess the importance of her assertions and allegations, but they’re clearly important to her, and to our understanding of what drives her. (And perhaps even in response to pushback from Facebook?)

What are those assertions and allegations? Well, in general terms, she evidently sees herself as having been ‘a voice from inside Facebook who could authoritatively connect the company’s pernicious algorithms and lies to its corporate culture … [without which] Facebook’s gaslighting and lies might still prevail.’

We’ve been told in recent years that she filed at least eight complaints against Facebook with the Securities and Exchange Commission, ‘alleging that the company is hiding research about its shortcomings from investors and the public’, but I was unable to find a direct reference to those complaints in the book.

https://edition.cnn.com/2021/10/03/tech/facebook-whistleblower-60-minutes/index.html

In her statement to the Senate Subcommittee on Consumer Protection, Product Safety, and Data Security, however, she claimed that Facebook’s products “harm children, stoke division and weaken our democracy” and prioritize profit over moral responsibility.

https://edition.cnn.com/business/live-news/facebook-senate-hearing-10-05-21/index.html

In the book she touches on a great many issues of concern, including:

- The rise and fall of the Civic Integrity team ‘spun up’ in the wake of the 2016 US election, with its subsequent defanging and dispersal.

- The Macedonian misinformation model (1. Build a ‘news’ site; 2. Add political articles; 3. Post links back from a Facebook page; 4. ‘Watch the [Google] AdSense dollars roll in’).

- Reluctance to reactivate ‘Break The Glass’ measures after the 2020 election, such as requiring a group with a score of hate speech strikes above a certain limit to apply moderation. Haugen clearly links the January 6th actions and ‘Stop The Steal’ to the absence of such ‘friction-adding’ measures.

- Recognition of and inadequate handling of ‘Adversarial Harmful Movements’.

- Refusal to share even basic data relating to inconvenient research.

- Cambridge Analytica data capture as facilitated by Facebook. Cambridge Analytica doesn’t get a lot of wordage in the book, but Haugen does remind us that Facebook was fined $5 billion in 2019 for misleading the public on how much data could be accessed by developer APIs.

- The effective caps on the number of fact-checking articles commissioned from Facebook’s partners and, crucially, paid for. (Later addressed by the BBC here: https://www.bbc.co.uk/news/technology-47779782).

- The trade-off between ‘short-term concrete costs’ and the long-term hypothetical risks of an expensive fiasco like the Cambridge Analytica disaster.

These are issues that deserve and need wider exposure and discussion, and that’s why Haugen’s book is important, even though it’s not always well-written: after all, we don’t all have access to the detailed information given to governmental bodies.

One specific issue with the quality of the writing caused me to grind my teeth quite a lot: an inconsistency in the way jargon is addressed. Early in the book, Haugen makes the occasional attempt to clarify coding/algorithmic concepts, even such basics as importing a library. (Though I have a certain amount of empathy with the story of how she was told she needed more instruction on modern software engineering: I went through a similar episode many years ago, when I was told by my manager that my (actually functional, but not necessarily elegant) C code was impenetrable…)

Unfortunately, however, she happily includes many examples of unexplained MBAspeak. Having spent some of the last few years of my working life providing consultancy services to North American companies, I’m not unfamiliar with some of the staples of business communications, and am fully prepared to reach out and circle the wagons in pursuit of an appropriate blue-skying box to think outside. (If Dilbert hadn’t already been invented, he would have had to exist.) Still, I’m (not very) grateful to have been introduced to some new ones (that is, I had to resort to a search engine to find out what they meant in the context in which they were used).

- ‘Hockey sticking’ describes a fairly flat line on a graph that suddenly shows a dramatic upward turn like a hockey stick handle.

- ‘Single-player mode’ is when someone on social media posts more than they read.

- ‘Red ocean’ is a Harvard Business School concept – it describes similarly-qualified ‘sharks’ competing in a blood-filled ocean.

But my favourite is when she complains of having been put in ‘an awkward onboarding position.’ I have a feeling I’ll be borrowing that one.

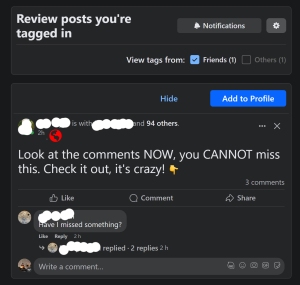

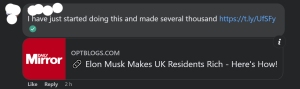

To be fair, the photo below illustrates a semi-meaningless cliché that I didn’t see in Haugen’s book, but I’m sure you know the one, and might enjoy this take on it.

Perhaps it’s unfair to make such a big deal out of this authoring blemish, but it does make me wonder for whom exactly she’s writing. Not, perhaps, a wide audience, so much as other corporate techies, executives, politicians and other policy makers, influencers and, most of all, potential whistleblowers – at any rate, people who might be concerned enough about the age of corporate-driven AI and the amoral algorithm to do their best to apply the brakes. And if she reaches such people, she deserves applause as much for that as for what she has told us about one specific and particularly problematic social media platform.

https://edition.cnn.com/2021/10/06/tech/facebook-frances-haugen-testimony/index.html